During the past couple of years, the use of artificial intelligence (AI) and deep learning (DL) across all sectors has increased exponentially. Not a day goes by that a new AI-based product or service isn’t hitting the market.

As the market for smart devices grows—be it a robotic vacuum cleaner or a home automation system—building high-quality yet affordable products relies heavily on the quality of the datasets used to train computer vision applications.

Democratizing access to high-quality yet affordable synthetic datasets lowers the barrier to entry and, thus, opens up the market to more innovative products serving underserved populations.

Now, when we talk about synthetic datasets in computer vision applications, we’re referring to images created rather than gathered in the real world.

So if we step back and look at the number of images required to train a robotic vacuum cleaner to not only efficiently clean but also recognize objects, people, and pets in a variety of home settings, the number quickly reaches into the thousands and possibly into the millions.

And this is where the very real concern on the democratization of AI and DL comes into play and the argument in favor of synthetic over real-world images quickly moves to center stage.

Why real-world image datasets are not a viable option today

The troves of images required to train a computer-vision application properly can quickly eliminate all but the very large, well-financed companies with deep pockets and category dominance.

A few years ago, I experienced just that. Months were spent gathering and labeling real-world images into datasets and spending millions of dollars along the way, only to discover that slight variable modifications meant starting over. Time and money were lost.

Fast-forward to today, and the playing field is dramatically changing.

As the time to market (TTM) shortens across all categories, it shouldn’t be at the expense of product functionality and affordable costs. For computer-vision-based products reliant on high-quality data, reliance upon real-world image datasets is proving outdated.

If you’re involved in product development, you’ll clearly understand the challenge of gathering enough real-world images corresponding to a specific use-case requirement for proper model training. And this challenge compounds further when privacy issues are taken into account while collecting real-world images.

The latest scandals covered in the media highlight the risks companies face when collecting and outsourcing user data. And this major limitation is compounded further by the cost and time required to label each image manually. In addition, it is almost impossible to modify or adapt real-world images to take into account lighting and camera angle, among other factors.

Even today, collecting and processing real-world images represents the most significant expenditure in the development of a computer-vision application. This high cost (both financial and manpower) is clearly a barrier to entry for small and medium-sized companies. As a result, millions of companies globally are excluded from the growth potential of computer-vision technologies.

As a comparison, imagine today’s internet if the barrier to entry during its growth period in the late ‘90s had been prohibitively costly and limited to only the technology giants. Imagine the impact. The democratization of the internet changed the world while unlocking opportunities for everyone.

How synthetic datasets democratize computer vision innovation

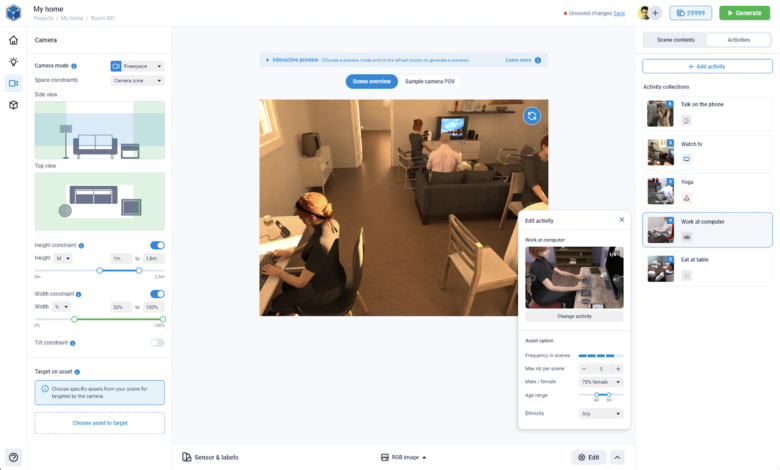

Creating datasets based on synthetic images has several advantages that address the issues mentioned above. First, the economic benefit of synthetic versus real-world images is substantial. The creation of synthetic datasets doesn’t require entire teams or subcontractors to acquire and label images. In fact, an engineer can do everything online in a matter of minutes or hours.

Second, datasets composed of hundreds of thousands of real-world images inherently contain biases that are impossible to evaluate. The balanced distribution of the different image parameters is impossible to control (lighting, objects of interest, camera placement, etc.). Take the example of a person falling and detail all the parameters that need to be captured to correctly generalize the concept of falling. All these imbalances negatively affect the performance of real-world images for training deep-learning models.

On the other hand, the procedural production of synthetic images is based on a well-defined set of parameters involved in creating three-dimensional scenes and configuring virtual sensors.

Therefore, it is possible to control the distribution of these parameters during the production of synthetic images and optimize the coverage of the learning space. This control makes it possible to increase the performance of a synthetic dataset.

Since all the parameters involved in creating synthetic images are under the user’s control, the synthetic dataset can be incrementally modified and adapted easily.

Lastly, synthetic images resolve significant issues of privacy. As iRobot learned, capturing user-generated images and outsourcing labeling quickly leads to misuse and mismanagement of customer data.

Common misconceptions

Often-cited barriers to using synthetic versus real-world datasets are reality gaps, model training reliability, and image realism. These are common misconceptions.

During an intensive benchmarking study conducted in 2021 in collaboration with Inria, the French national research institute for digital science and technology, AI Verse demonstrated that using synthetic datasets for computer vision model training was as effective as model training using real-world images.

The test results demonstrated that image realism is not the most critical element for training computer vision models. In fact, in the benchmark, a reality gap existed between the two different datasets of real-world images tested. And the inherent biases of the real-world image datasets limited their viability also.

The ability to optimize the distribution of synthetic datasets and the possible variation of all the parameters involved in the creation of the images compensate for lower realism and ensure a higher level of model training performance.

In a counterintuitive way, and for the reasons discussed, real-world images are not the optimal data for training deep learning networks on a large scale and for all use cases. Instead, synthetic image datasets offer a more suitable solution: configurable, flexible, fast, inexpensive, and controllable.

And as AI pioneer Andrew Ng notes in the MIT Sloan School of Management article ‘Why it’s time for data-centric artificial intelligence,‘ improving the performance of a network requires optimizing the training data.

Optimization is only possible with synthetic images produced by a procedural engine.